Introduction

Just a brief recap of last week’s blog post, where we covered:

Introduction to Terraform:

I began using Terraform for AWS in 2017 and expanded to IT services like Okta after its Terraform provider was released in 2019.

Challenges with Legacy Terraform:

- Inflexible, complex code designed for specific infrastructure.

- Scaling issues addressed via custom scripts.

- Single pipeline for all resources and confusing workflows.

Solutions Implemented:

- Workspace Segmentation:
  - Divided into Core (critical automation) and Standard (team-specific features).
  - Development cycle: Local → Preview → Production.
  - Branch protections for stability.

- Scalable Code:
  - Dynamic, flexible, and heavily documented.
  - Handles drift intelligently (corrects critical drift, tolerates controlled drift).

- Monitoring & Alerts:
  - Dashboards for drift detection, resource states, and run metrics.
  - Automations for re-applying Terraform on drift.

Implementation Plan:

- The seven-part series covers planning, workspace segmentation, automation, and security policy management.

Community Resources:

- Engage with the #okta-terraform channel on the MacAdmins Slack for support.

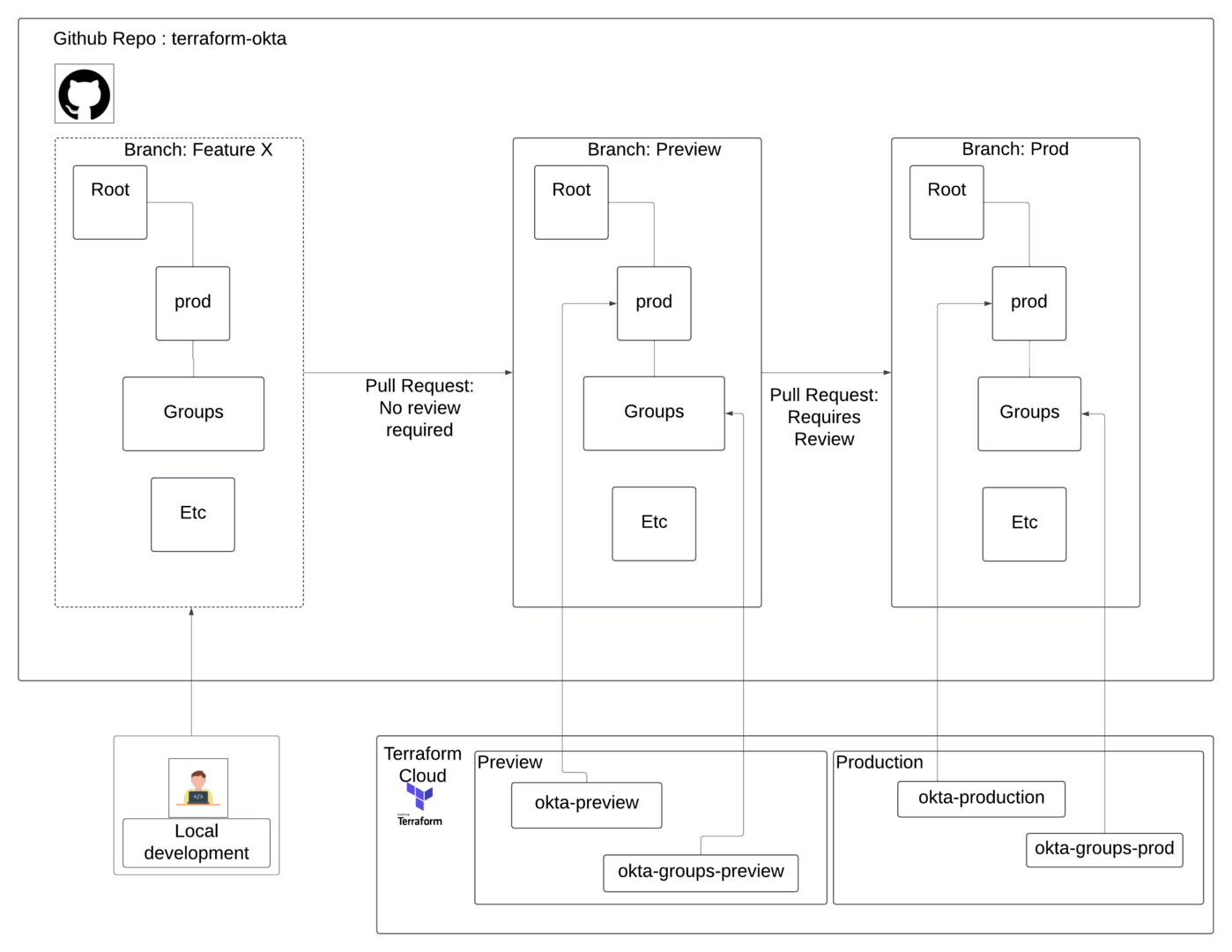

Last week, I went over the history of how we have used Terraform and the ideas we had while planning it out. This week, I will introduce our requirements for bringing Terraform into our environment and how we segmented it across GitHub, Terraform, and Okta.

The Implementation

The best place to start is a long conversation about the build pipelines, segmentation, and expected results, with the outcomes captured in Architectural Decision Records (ADRs). The concerns raised were:

- Using multiple GitHub repos didn’t reduce risk or surface issues; it would just add unnecessary complexity.
  - For example, even if we split out several repos, the “main” repo would still need a broadly scoped API key, so splitting wouldn’t reduce API key exposure.
  - Having code live in multiple places could cause drift and conflicting code.

- Using “prod” and “preview” folders could cause drift and code alignment issues, especially when making “emergency fixes” in the production environment.
  - Allowing emergency/break-glass changes, which are common in coding environments, would cause longer-term issues by permitting drift and unwanted changes or configurations. We wanted to prevent this.

- We want the ability to enable auto-run in our Terraform workspaces where needed.

- We need to abide by the EU’s Digital Operational Resilience Act (DORA) and, eventually, the US equivalent once its requirements are defined.

Workspace Segmentation in GitHub

Directory Structure

The setup we will use lets us keep a single repo for both Preview and Production while supporting multiple Terraform workspaces.


Additionally, we can create further folder structures for anything we need to auto-run, for example:


This way, the org can map each folder to a separate Terraform workspace, keep self-contained Terraform configurations (e.g., those that don’t have dependencies or rely on outputs or inputs from other configurations), and auto-run the ones that need it. If we do not need auto-run, we can still use the production folder for anything else that will be run on demand.
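As a minimal sketch of how a pipeline could decide which workspace folders a change touches, the Python below assumes a hypothetical layout. In it, okta/production holds the on-demand workspace and each subfolder of okta/auto-run is its own auto-run workspace. None of these paths come from the original setup.

```python
from pathlib import PurePosixPath

# Hypothetical layout (illustrative only, not the post's actual folders):
#   okta/production/...        -> one on-demand workspace
#   okta/auto-run/<name>/...   -> one auto-run workspace per subfolder
ON_DEMAND_ROOT = ("okta", "production")
AUTO_RUN_ROOT = ("okta", "auto-run")


def affected_workspaces(changed_files):
    """Map changed repo paths to {workspace_folder: auto_run} entries."""
    result = {}
    for path in changed_files:
        parts = PurePosixPath(path).parts
        if len(parts) >= 4 and parts[:2] == AUTO_RUN_ROOT:
            # okta/auto-run/<name>/<file>: the <name> folder is its own workspace
            result["/".join(parts[:3])] = True
        elif len(parts) >= 3 and parts[:2] == ON_DEMAND_ROOT:
            # Anything under okta/production belongs to the on-demand workspace
            result["/".join(ON_DEMAND_ROOT)] = False
        # Files outside these roots (README, workflows) map to no workspace
    return dict(sorted(result.items()))


changes = ["okta/auto-run/groups/main.tf", "okta/production/apps.tf", "README.md"]
print(affected_workspaces(changes))
# {'okta/auto-run/groups': True, 'okta/production': False}
```

In HCP Terraform, VCS-connected workspaces can already limit runs to changes under their working directory, and auto-apply is a per-workspace setting. The sketch only makes that folder-to-workspace mapping explicit.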

Feature, Preview, and Main Branches and Protections

With the setup above, we don’t have a separate Preview/staging environment folder, which is exactly what we want: our preview and production environments should directly mirror each other. Preview receives configurations first, so we can validate the configuration, changes, and any other setup before they go to production. This also lets us test new features and services directly against our preview environment.

So, how do we deal with accidental PRs from Feature X > Main? Once we are sure the necessary configuration is set up and everything is working according to plan, we will create a GitHub Action that does one of two things:

- Auto-close the PR, commenting that it was opened against the wrong base and informing the author that they need to reopen it into Preview.

- Use GitHub’s API to automatically switch the PR’s base from the Main branch to Preview.

You can see the code below.

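The retargeting decision itself is small. This is a minimal sketch, not the workflow from the original post. It assumes the long-lived branches are literally named main and preview. The Python decides whether a PR should be retargeted or closed, then builds the matching call to GitHub's "Update a pull request" endpoint (PATCH /repos/{owner}/{repo}/pulls/{pull_number}), which accepts base and state fields. The owner, repo, and token values are placeholders.

```python
import json
import urllib.request

MAIN = "main"        # assumed name of the production branch
PREVIEW = "preview"  # assumed name of the only branch allowed to target main


def plan_pr_action(head_ref, base_ref, mode="retarget"):
    """Return the PATCH payload for a misdirected PR, or None if it's fine."""
    if base_ref != MAIN or head_ref == PREVIEW:
        return None  # targets preview, or is the sanctioned preview -> main PR
    if mode == "close":
        return {"state": "closed"}  # option 1: close (comment posted separately)
    return {"base": PREVIEW}  # option 2: switch the base branch to preview


def build_update_request(owner, repo, number, payload, token):
    """Build, without sending, the Update-a-pull-request REST call."""
    return urllib.request.Request(
        f"https://api.github.com/repos/{owner}/{repo}/pulls/{number}",
        data=json.dumps(payload).encode("utf-8"),
        method="PATCH",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
        },
    )


payload = plan_pr_action("feature-x", "main")
print(payload)  # {'base': 'preview'}
req = build_update_request("example-org", "okta-terraform", 42, payload, "TOKEN")
# urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
```

Inside a workflow triggered by pull_request, github.head_ref and github.base_ref provide the two branch names. The job's GITHUB_TOKEN needs pull-requests: write permission to update the PR. Posting the explanatory comment for the close option would be a separate call to the issue comments endpoint.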

Cleaning up git branches

GitHub can keep branches tidy by automatically deleting head branches after a PR has been merged. This generally works great. However, since we will constantly open PRs from preview into main, that setting would cause preview to be auto-deleted.

So let’s disable that setting and handle branch deletion in GitHub Actions instead. This way, the behavior is also change-managed if we ever need to deviate from it.

Building on the code above, create another file in .github/workflows and populate it with the following content:

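This is a minimal sketch of the cleanup decision, assuming main and preview are the branches to protect. After a PR closes, its head branch is deleted only when three things hold:

- the PR was merged;
- the branch lives in the same repository, since a workflow token can't delete branches in a contributor's fork;
- it isn't one of the long-lived branches.

The helper also builds the URL for GitHub's "Delete a reference" endpoint (DELETE /repos/{owner}/{repo}/git/refs/{ref}). The names are illustrative.

```python
from urllib.parse import quote

# Long-lived branches that must never be auto-deleted (assumed names).
PROTECTED = frozenset({"main", "preview"})


def branch_to_delete(head_ref, merged, same_repo=True):
    """Return the head branch to delete after a PR closes, or None to keep it."""
    if not merged or not same_repo or head_ref in PROTECTED:
        return None
    return head_ref


def delete_ref_url(owner, repo, branch):
    """URL for DELETE /repos/{owner}/{repo}/git/refs/heads/{branch}."""
    # Keep '/' unescaped so feature/x maps to the ref heads/feature/x.
    return (
        f"https://api.github.com/repos/{owner}/{repo}"
        f"/git/refs/heads/{quote(branch, safe='/')}"
    )


print(branch_to_delete("preview", merged=True))  # None: preview must survive
print(branch_to_delete("feature/okta-apps", merged=True))  # feature/okta-apps
```

In a workflow, this would run on pull_request events of type closed. The inputs come from github.event.pull_request.merged and github.event.pull_request.head.repo.full_name, and the token needs contents: write permission to delete the ref.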

Workspace Segmentation in Terraform

Importing stock Okta Configurations in Preview and Prod

We do not need any wrappers here; we will just use Terraform natively. Thankfully, Terraform 1.7 added support for for_each in import blocks.

So, how do we write these imports for the preview and production environments simultaneously, depending on the Terraform workspace?

Note

In the example below, the keys of “import_ids” (preview and prod) should match your Terraform workspace environment names.


With this setup, we can run the imports once and remove the import blocks afterward, once the state contains the resources. Of course, this will vary for every “stock” application, but it should be a relatively good example of how this works for each environment. As previously mentioned, each folder inside the GitHub repo is linked to a Terraform workspace, which works in combination with the GitHub branches.
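Because the same import blocks run in both workspaces, a missing key in one environment's map is easy to overlook. As a hedged sketch, the Python below models a Terraform local that maps workspace names (preview and prod, per the note above) to resource keys and Okta IDs. It then checks that both environments import the same set of resources. The resource keys and IDs are made up for illustration.

```python
# Hypothetical stand-in for a Terraform local keyed by workspace name, which
# the import blocks would read with for_each; all keys and IDs are invented.
IMPORT_IDS = {
    "preview": {"everyone": "00gPREVIEW001", "okta_admins": "00gPREVIEW002"},
    "prod": {"everyone": "00gPROD001", "okta_admins": "00gPROD002"},
}


def ids_for(workspace, ids=IMPORT_IDS):
    """Return the import map for one workspace, failing loudly on a typo."""
    if workspace not in ids:
        raise ValueError(f"no import_ids for workspace {workspace!r}")
    return ids[workspace]


def parity_errors(ids=IMPORT_IDS):
    """List resource keys that one workspace imports but another does not."""
    all_keys = set().union(*ids.values())
    return sorted(
        f"{workspace}: missing {key}"
        for workspace, mapping in ids.items()
        for key in all_keys - set(mapping)
    )


print(parity_errors())  # []
print(parity_errors({"preview": {"a": "1", "b": "2"}, "prod": {"a": "3"}}))
# ['prod: missing b']
```

A check like this could run in CI before a plan. A resource added to preview's map without a production counterpart then fails early instead of surfacing later as a mismatch between environments.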

Workspace Segmentation in Okta

Preview vs. Prod Environments

While I think it is a relatively straightforward concept, the setup above gives us the following:

- Everything runs through our preview environment first.

- We can version releases of our Okta preview and/or production environments using CalVer, SemVer, or a variation of the two.

- We are required to test our environment before pushing to production.

- We provide safety in the configuration.

We now have a Terraform configuration that commits to Preview first and requires a PR for production. We can safely apply applications and other configurations to our preview environment before pushing them to production.
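Here is a small sketch of both versioning notations, assuming releases are recorded as Git tags. The CalVer scheme is YEAR.MONTH.N, a hypothetical choice since many CalVer variants exist. The SemVer side is a plain MAJOR.MINOR.PATCH bump.

```python
from datetime import date


def next_calver(existing_tags, today):
    """Next YYYY.MM.N tag for today's month, e.g. 2024.05.1, then 2024.05.2."""
    prefix = f"{today.year}.{today.month:02d}."
    used = [
        int(tag[len(prefix):])
        for tag in existing_tags
        if tag.startswith(prefix) and tag[len(prefix):].isdigit()
    ]
    return f"{prefix}{max(used, default=0) + 1}"


def bump_semver(version, part):
    """Bump a MAJOR.MINOR.PATCH string; lower-order fields reset to zero."""
    major, minor, patch = (int(x) for x in version.split("."))
    if part == "major":
        return f"{major + 1}.0.0"
    if part == "minor":
        return f"{major}.{minor + 1}.0"
    if part == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown part {part!r}")


print(next_calver(["2024.04.7", "2024.05.1"], date(2024, 5, 20)))  # 2024.05.2
print(bump_semver("1.4.2", "minor"))  # 1.5.0
```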

Local Development

So, how do we develop features for Preview?

When developing features and changes for the preview environment, we couldn’t properly develop against a single shared Okta environment with three people working on features simultaneously; the Terraform state would constantly be in conflict. As a result, each team member needs their own Okta environment for local development, which means setting up Terraform locally.

To do that, we use override.tf files for variables, listed in .gitignore, which lets each of us specify our own environment locally while still running terraform apply in the cloud.
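Terraform merges any file named override.tf (or ending in _override.tf) into the matching blocks of the main configuration, which is what makes per-developer settings possible. As a minimal sketch, the Python below renders variable blocks that override defaults for local development. The variable names and values are hypothetical. The variables must already be declared in the main configuration, because override files only merge into existing blocks.

```python
import json


def render_override(variables):
    """Render HCL variable blocks whose defaults override the shared config.

    json.dumps output is valid HCL for simple strings, numbers, and booleans;
    strings containing ${ or %{ would be treated as templates, so avoid them.
    """
    blocks = []
    for name, value in sorted(variables.items()):
        blocks.append(f'variable "{name}" {{\n  default = {json.dumps(value)}\n}}\n')
    return "\n".join(blocks)


# Hypothetical per-developer values; write the result to override.tf, which
# (like *_override.tf) should be listed in .gitignore.
print(render_override({"okta_org_name": "dev-00000000", "okta_base_url": "okta.com"}))
```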

And that is it

This covers all of the situations we have encountered when converting certain pieces of our GitHub, Terraform, and Okta environments. I’ll also be publishing a new blog post next week about automatically creating and destroying department groups, so be on the lookout.

A lot will be covered over the next several parts, which together sum up how we have terraformed certain pieces of our Okta environment. If you have questions and are looking for a community resource, I highly recommend reaching out in #okta-terraform on the MacAdmins Slack, as I would say at least 30% (note, I made this statistic up) of the organizations using Terraform hang out in this channel. Otherwise, you can always find an alternative unofficial community for assistance or ideas.